Getting Started with Google Colab

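I wrote this post while working as a TA for a graduate course. It explains how to use Colab and covers the basics of deep learning with the Keras toolkit. It also covers extracting MFCCs from audio files.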

Checking the system specs

!cat /proc/cpuinfo

processor : 0

vendor_id : GenuineIntel

cpu family : 6

model : 63

model name : Intel(R) Xeon(R) CPU @ 2.30GHz

stepping : 0

microcode : 0x1

cpu MHz : 2300.000

cache size : 46080 KB

physical id : 0

siblings : 2

core id : 0

cpu cores : 1

apicid : 0

initial apicid : 0

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology nonstop_tsc cpuid pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm abm pti ssbd ibrs ibpb stibp fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms xsaveopt arat arch_capabilities

bugs : cpu_meltdown spectre_v1 spectre_v2 spec_store_bypass l1tf

bogomips : 4600.00

clflush size : 64

cache_alignment : 64

address sizes : 46 bits physical, 48 bits virtual

power management:

processor : 1

vendor_id : GenuineIntel

cpu family : 6

model : 63

model name : Intel(R) Xeon(R) CPU @ 2.30GHz

stepping : 0

microcode : 0x1

cpu MHz : 2300.000

cache size : 46080 KB

physical id : 0

siblings : 2

core id : 0

cpu cores : 1

apicid : 1

initial apicid : 1

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology nonstop_tsc cpuid pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm abm pti ssbd ibrs ibpb stibp fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms xsaveopt arat arch_capabilities

bugs : cpu_meltdown spectre_v1 spectre_v2 spec_store_bypass l1tf

bogomips : 4600.00

clflush size : 64

cache_alignment : 64

address sizes : 46 bits physical, 48 bits virtual

power management:
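The dump above is long, but the key facts are that there are two logical processors (`processor : 0` and `processor : 1`) and that both report `physical id : 0`, `core id : 0`, `siblings : 2` and `cpu cores : 1`. In other words, the VM sees two hyperthreads of a single physical core. The sketch below condenses the output to the processor count and model name. `summarize_cpuinfo` is a hypothetical helper, not part of Colab. In a notebook you would pass it `open('/proc/cpuinfo').read()`; here it runs on an excerpt of the output above.

```python
def summarize_cpuinfo(text):
    """Count logical processors and collect distinct model names from /proc/cpuinfo text."""
    processors = 0
    models = set()
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        if key == "processor":
            processors += 1
        elif key == "model name":
            models.add(value)
    return processors, sorted(models)


# Excerpt of the Colab output above
sample = """processor : 0
model name : Intel(R) Xeon(R) CPU @ 2.30GHz
cpu cores : 1
processor : 1
model name : Intel(R) Xeon(R) CPU @ 2.30GHz
cpu cores : 1
"""

count, names = summarize_cpuinfo(sample)
print(count, names)  # 2 ['Intel(R) Xeon(R) CPU @ 2.30GHz']
```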

!cat /proc/meminfo

MemTotal: 13335212 kB

MemFree: 8513476 kB

MemAvailable: 11822980 kB

Buffers: 127904 kB

Cached: 3096336 kB

SwapCached: 0 kB

Active: 1325920 kB

Inactive: 3094080 kB

Active(anon): 945144 kB

Inactive(anon): 5448 kB

Active(file): 380776 kB

Inactive(file): 3088632 kB

Unevictable: 0 kB

Mlocked: 0 kB

SwapTotal: 0 kB

SwapFree: 0 kB

Dirty: 1028 kB

Writeback: 0 kB

AnonPages: 1195632 kB

Mapped: 514604 kB

Shmem: 5968 kB

Slab: 203064 kB

SReclaimable: 163508 kB

SUnreclaim: 39556 kB

KernelStack: 3872 kB

PageTables: 8772 kB

NFS_Unstable: 0 kB

Bounce: 0 kB

WritebackTmp: 0 kB

CommitLimit: 6667604 kB

Committed_AS: 2970144 kB

VmallocTotal: 34359738367 kB

VmallocUsed: 0 kB

VmallocChunk: 0 kB

AnonHugePages: 0 kB

ShmemHugePages: 0 kB

ShmemPmdMapped: 0 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

DirectMap4k: 167924 kB

DirectMap2M: 6123520 kB

DirectMap1G: 9437184 kB
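The `/proc/meminfo` values are in kB, which in practice means 1024-byte units. Converting to GiB makes them easier to read. For the values above, MemTotal is about 12.7 GiB and MemAvailable about 11.3 GiB. The helpers below (`parse_meminfo`, `kb_to_gib`) are hypothetical names for this sketch, and they run on an excerpt of the output above.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo text into a dict of {field: value in kB}."""
    info = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        fields = rest.split()
        if fields:
            info[key.strip()] = int(fields[0])  # the unit, when present, is kB
    return info


def kb_to_gib(kb):
    """Convert 1024-byte kB units to GiB."""
    return kb / (1024 ** 2)


sample = """MemTotal: 13335212 kB
MemFree: 8513476 kB
MemAvailable: 11822980 kB
HugePages_Total: 0
"""

mem = parse_meminfo(sample)
print("total %.1f GiB, available %.1f GiB"
      % (kb_to_gib(mem["MemTotal"]), kb_to_gib(mem["MemAvailable"])))
# total 12.7 GiB, available 11.3 GiB
```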

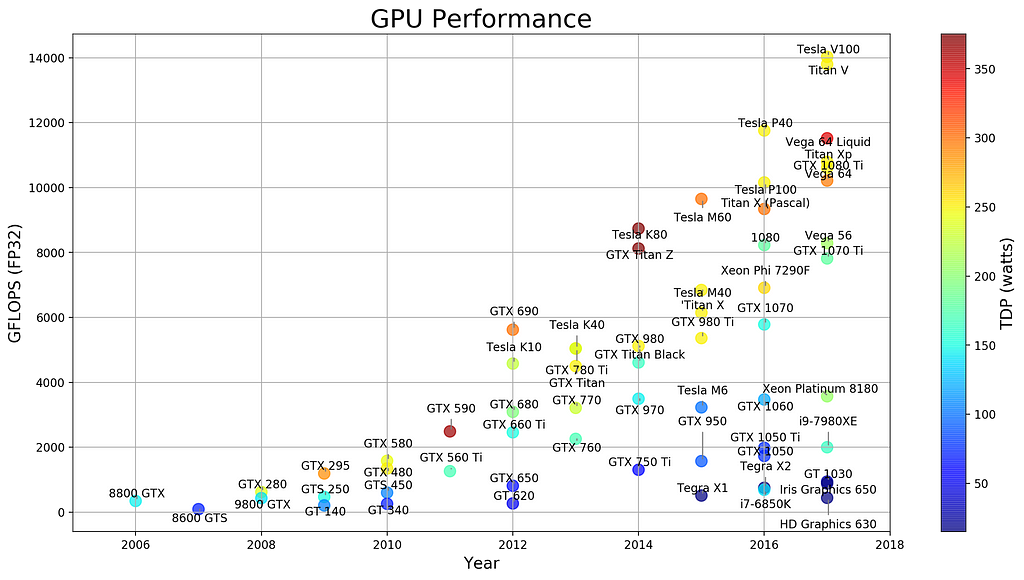

!nvidia-smi

Wed Nov 28 06:03:48 2018

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 396.44 Driver Version: 396.44 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

|===============================+======================+======================|

| 0 Tesla K80 Off | 00000000:00:04.0 Off | 0 |

| N/A 36C P0 69W / 149W | 202MiB / 11441MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: GPU Memory |

| GPU PID Type Process name Usage |

|=============================================================================|

+-----------------------------------------------------------------------------+

Installing libraries

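- numpy : NumPy is the core library for scientific computing in Python. It provides a high-performance multidimensional array object and tools for working with these arrays.

- TensorFlow : a deep learning library developed and released by Google

- Keras : a library that abstracts TensorFlow so that it is easier to use

- librosa : provides a range of audio feature extraction and visualization tools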
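NumPy arrays underlie everything that follows, including audio samples, MFCC matrices and MNIST images. Here is a minimal illustration of the array object and the vectorized operations described above.

```python
import numpy as np

# A 2 x 3 array of 32-bit floats
a = np.array([[1, 2, 3], [4, 5, 6]], dtype=np.float32)
print(a.shape, a.dtype)  # (2, 3) float32

# Element-wise arithmetic applies to every element, with no explicit Python loop
b = a * 2 + 1

# Reductions along an axis: column sums (axis=0) and row means (axis=1)
print(b.sum(axis=0))   # [12. 16. 20.]
print(b.mean(axis=1))  # [ 5. 11.]

# Reshape the same 6 values into 3 rows x 2 columns
print(a.reshape(3, 2).shape)  # (3, 2)
```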

!pip install numpy keras librosa matplotlib

Requirement already satisfied: numpy in /usr/local/lib/python2.7/dist-packages (1.14.6)

Requirement already satisfied: keras in /usr/local/lib/python2.7/dist-packages (2.2.4)

Requirement already satisfied: librosa in /usr/local/lib/python2.7/dist-packages (0.6.2)

Requirement already satisfied: matplotlib in /usr/local/lib/python2.7/dist-packages (2.1.2)

Requirement already satisfied: keras-applications>=1.0.6 in /usr/local/lib/python2.7/dist-packages (from keras) (1.0.6)

Requirement already satisfied: scipy>=0.14 in /usr/local/lib/python2.7/dist-packages (from keras) (1.1.0)

Requirement already satisfied: keras-preprocessing>=1.0.5 in /usr/local/lib/python2.7/dist-packages (from keras) (1.0.5)

Requirement already satisfied: h5py in /usr/local/lib/python2.7/dist-packages (from keras) (2.8.0)

Requirement already satisfied: pyyaml in /usr/local/lib/python2.7/dist-packages (from keras) (3.13)

Requirement already satisfied: six>=1.9.0 in /usr/local/lib/python2.7/dist-packages (from keras) (1.11.0)

Requirement already satisfied: decorator>=3.0.0 in /usr/local/lib/python2.7/dist-packages (from librosa) (4.3.0)

Requirement already satisfied: joblib>=0.12 in /usr/local/lib/python2.7/dist-packages (from librosa) (0.13.0)

Requirement already satisfied: audioread>=2.0.0 in /usr/local/lib/python2.7/dist-packages (from librosa) (2.1.6)

Requirement already satisfied: numba>=0.38.0 in /usr/local/lib/python2.7/dist-packages (from librosa) (0.40.1)

Requirement already satisfied: scikit-learn!=0.19.0,>=0.14.0 in /usr/local/lib/python2.7/dist-packages (from librosa) (0.19.2)

Requirement already satisfied: resampy>=0.2.0 in /usr/local/lib/python2.7/dist-packages (from librosa) (0.2.1)

Requirement already satisfied: cycler>=0.10 in /usr/local/lib/python2.7/dist-packages (from matplotlib) (0.10.0)

Requirement already satisfied: backports.functools-lru-cache in /usr/local/lib/python2.7/dist-packages (from matplotlib) (1.5)

Requirement already satisfied: subprocess32 in /usr/local/lib/python2.7/dist-packages (from matplotlib) (3.5.3)

Requirement already satisfied: pytz in /usr/local/lib/python2.7/dist-packages (from matplotlib) (2018.7)

Requirement already satisfied: python-dateutil>=2.1 in /usr/local/lib/python2.7/dist-packages (from matplotlib) (2.5.3)

Requirement already satisfied: pyparsing!=2.0.4,!=2.1.2,!=2.1.6,>=2.0.1 in /usr/local/lib/python2.7/dist-packages (from matplotlib) (2.3.0)

Requirement already satisfied: funcsigs in /usr/local/lib/python2.7/dist-packages (from numba>=0.38.0->librosa) (1.0.2)

Requirement already satisfied: enum34 in /usr/local/lib/python2.7/dist-packages (from numba>=0.38.0->librosa) (1.1.6)

Requirement already satisfied: llvmlite>=0.25.0dev0 in /usr/local/lib/python2.7/dist-packages (from numba>=0.38.0->librosa) (0.25.0)

Requirement already satisfied: singledispatch in /usr/local/lib/python2.7/dist-packages (from numba>=0.38.0->librosa) (3.4.0.3)

import os

Checking the directory contents

os.listdir('./')

['.config', 'sample_data', 'drive']

Mounting Google Drive

Authenticate, then mount Google Drive.

from google.colab import drive

drive.mount('/content/drive')

Mounted at /content/drive

os.listdir('/content/drive/My Drive/Colab Notebooks')

['Untitled1.ipynb',

'Untitled0.ipynb',

'Untitled2.ipynb',

'Untitled3.ipynb',

'category',

'Untitled',

'Untitled4.ipynb',

'실습실습.ipynb']
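A listing like this mixes notebooks with folders such as `category`. To keep only the notebooks, filter on the file extension. The sketch below uses a temporary directory as a stand-in for the mounted `Colab Notebooks` folder. Its file names are made up for the example, and `list_notebooks` is a hypothetical helper.

```python
import os
import tempfile


def list_notebooks(path):
    """Return the sorted names of .ipynb files directly inside `path`."""
    return sorted(
        name for name in os.listdir(path)
        if os.path.splitext(name)[1] == ".ipynb"
        and os.path.isfile(os.path.join(path, name))
    )


# Stand-in for '/content/drive/My Drive/Colab Notebooks' (hypothetical contents)
with tempfile.TemporaryDirectory() as tmp:
    for name in ["Untitled0.ipynb", "Untitled1.ipynb", "notes.txt"]:
        open(os.path.join(tmp, name), "w").close()
    os.mkdir(os.path.join(tmp, "category"))
    found = list_notebooks(tmp)

print(found)  # ['Untitled0.ipynb', 'Untitled1.ipynb']
```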

import librosa

import numpy as np

import librosa.display

from matplotlib import pyplot as plt

%matplotlib inline

Data visualization with librosa's example audio

!apt-get install -y -qq ffmpeg

y, sr = librosa.load(librosa.util.example_audio_file(), duration=10)

librosa.load returns two values:

- y : np.ndarray [shape=(n,) or (2, n)], the audio time series

- sr : number > 0 [scalar], the sampling rate of y

Checking the sampling rate (librosa.load resamples to 22050 Hz by default)

sr

22050

Checking the audio time series and its shape

print(y,y.shape)

(array([ 0. , 0. , 0. , ..., -0.07654281, -0.0804726 , -0.09472633], dtype=float32), (220500,))
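The shape `(220500,)` means a one-dimensional (mono) signal. Its length divided by the sampling rate gives the duration: 220500 / 22050 = 10.0 seconds, which matches `duration=10` in the `librosa.load` call. librosa loads audio as mono by default (`mono=True`). A stereo signal would have shape `(2, n)`, and averaging the two channels is one simple way to downmix it. As far as I know, this averaging is also what `librosa.to_mono` does. The sketch below uses synthetic stand-in arrays, so it does not overwrite the real `y` and `sr`.

```python
import numpy as np

sample_rate = 22050
signal = np.zeros(220500, dtype=np.float32)  # stand-in with the same shape as y above

print(signal.ndim, len(signal) / sample_rate)  # 1 10.0

# A synthetic stereo signal has shape (2, n); averaging the channels gives mono
stereo = np.stack([np.ones(sample_rate, dtype=np.float32),
                   -0.5 * np.ones(sample_rate, dtype=np.float32)])
mono = stereo.mean(axis=0)
print(stereo.shape, mono.shape, mono[0])  # (2, 22050) (22050,) 0.25
```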

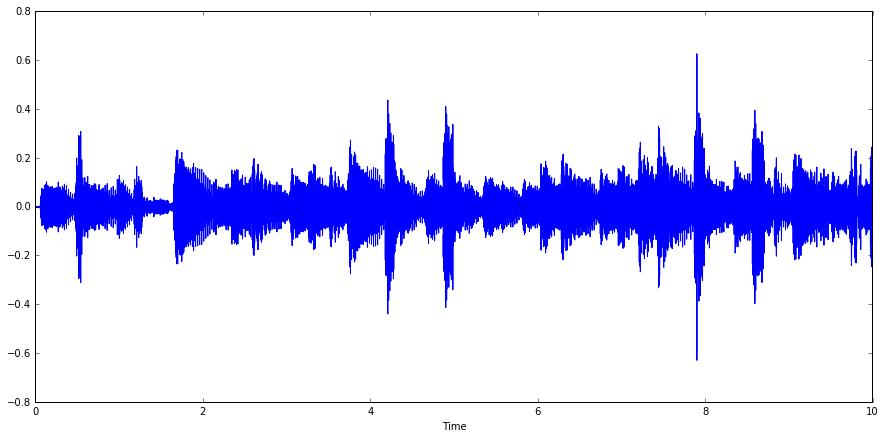

plt.figure(figsize=(15,7))

librosa.display.waveplot(y,sr=sr)

<matplotlib.collections.PolyCollection at 0x7f342b54c490>

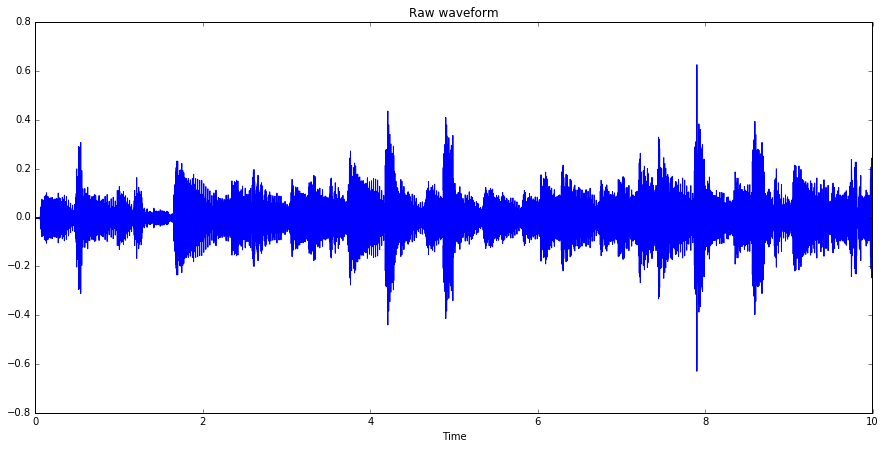

plt.figure(figsize=(15,7))

librosa.display.waveplot(y,sr=sr)

plt.title('Raw waveform')

plt.show()

import IPython.display as ipd

ipd.Audio(y,rate=sr)

Extracting MFCCs from the sample file

Reference: https://librosa.github.io/librosa/generated/librosa.feature.mfcc.html#librosa-feature-mfcc

mfcc = librosa.feature.mfcc(y=y, sr=sr)

mfcc

array([[-585.74258221, -536.98026502, -420.33729707, ..., -312.36725669, -294.1104376 , -296.64193221],

[ 0. , 60.42567623, 147.08731492, ..., 100.49875748, 98.6326375 , 129.67881767],

[ 0. , 41.15048816, 35.22410725, ..., 67.2011451 , 74.83373746, 78.34393586],

...,

[ 0. , -9.40651646, -5.60343754, ..., 6.0100009 , 5.52348393, 2.21866423],

[ 0. , -7.86510647, -5.9375782 , ..., 3.39014456, 4.68482683, 8.28399921],

[ 0. , -4.03834951, -4.45187158, ..., -5.21105313, -6.87013638, -5.5506121 ]])

Checking the dimensions of the MFCC array

- By default, 20 MFCCs are extracted (n_mfcc=20), so the array has 20 rows and one column per frame.

mfcc.shape

(20, 431)
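The 431 columns follow from librosa's framing defaults: `n_fft=2048`, `hop_length=512` and `center=True`. As I understand it, centered framing pads `n_fft // 2` samples on each side of the signal and then slides the window by `hop_length`. The helper below is a hypothetical sketch of that arithmetic, not librosa's own code.

```python
def num_frames(n_samples, n_fft, hop_length):
    """Frame count for centered framing: pad n_fft // 2 samples on each side, then slide a window."""
    padded = n_samples + 2 * (n_fft // 2)
    return 1 + (padded - n_fft) // hop_length


print(num_frames(220500, 2048, 512))  # 431: librosa's default n_fft and hop_length
print(num_frames(220500, 441, 220))   # 1003: the 20 ms / 10 ms setting used next
```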

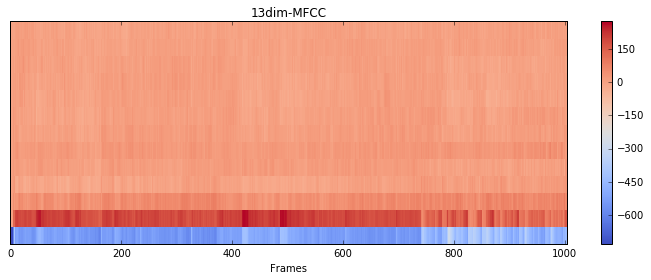

Extracting 13-dimensional MFCCs

- 20 ms window (n_fft), 10 ms stride (hop_length)

mfcc = librosa.feature.mfcc(y=y, sr=sr, n_mfcc=13,
                            n_fft=int(0.02*sr), hop_length=int(0.01*sr))

mfcc.shape

(13, 1003)
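At sr = 22050, the millisecond settings above become `int(0.02*22050)` = 441 samples per window and `int(0.01*22050)` = 220 samples per hop. Because 0.01·22050 = 220.5 is truncated, the actual stride is about 9.98 ms rather than exactly 10 ms. The sketch below reproduces that arithmetic. Rounding instead of truncating would be an equally valid design choice.

```python
sr_hz = 22050

win_ms, hop_ms = 20, 10
n_fft = int(win_ms / 1000 * sr_hz)       # samples per analysis window
hop_length = int(hop_ms / 1000 * sr_hz)  # samples between successive frames (truncated)

print(n_fft, hop_length)                            # 441 220
print(round(1000 * hop_length / sr_hz, 2), "ms hop")  # 9.98 ms hop
```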

np.transpose(mfcc).shape

(1003, 13)

np.transpose(mfcc)[5]

array([-616.80164198, 112.90440116, 36.59436053, 5.24560461, 8.94744557, 20.13686159, 32.53575185,

29.90577324, 11.01739721, 2.37518004, 7.64154294, 6.40403061, -1.77340686])

plt.figure(figsize=(10, 4))

librosa.display.specshow(mfcc, x_axis='frames')

plt.colorbar()

plt.title('13dim-MFCC')

plt.tight_layout()

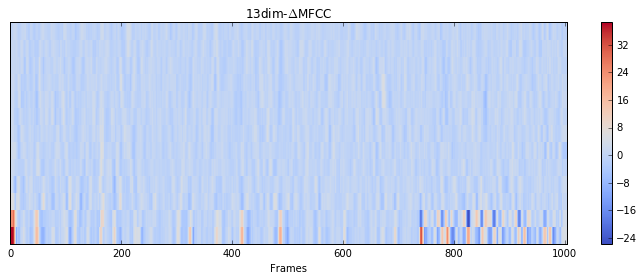

plt.figure(figsize=(10, 4))

delta=librosa.feature.delta(mfcc)

librosa.display.specshow(delta, x_axis='frames')

plt.colorbar()

plt.title(r'13dim-$\Delta$MFCC')

plt.tight_layout()

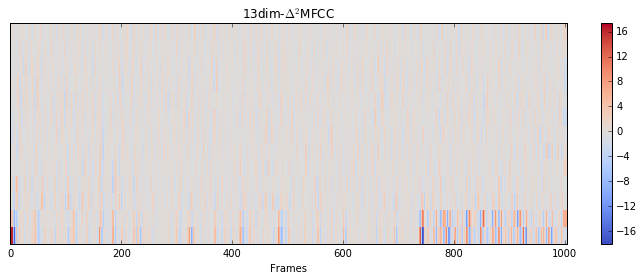
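`librosa.feature.delta` estimates the local time derivative of each MFCC coefficient. As I understand it, librosa uses a Savitzky-Golay filter for this, with a default `width=9`. For `order=1`, that filter matches the classic regression formula d_t = Σ_{n=1..N} n·(c_{t+n} - c_{t-n}) / (2·Σ_{n=1..N} n²) with N = 4, at least away from the edges. The sketch below implements that regression formula. Its edge handling, which repeats the first and last frame, is an assumption and differs from librosa's, so only interior values should be compared. `regression_delta` is a hypothetical name.

```python
import numpy as np


def regression_delta(features, N=4):
    """Delta features along the time axis (last axis) via the regression formula.

    features: array of shape (n_coeffs, n_frames). Edges repeat the first/last
    frame, which is an assumption and not librosa's edge handling.
    """
    n_frames = features.shape[-1]
    padded = np.pad(features, ((0, 0), (N, N)), mode="edge")
    denom = 2 * sum(n * n for n in range(1, N + 1))
    delta = np.zeros_like(features, dtype=float)
    for n in range(1, N + 1):
        # padded[:, N + t + n] is frame t + n; padded[:, N + t - n] is frame t - n
        delta += n * (padded[:, N + n:N + n + n_frames] - padded[:, N - n:N - n + n_frames])
    return delta / denom


# A coefficient that rises by 0.5 per frame has slope 0.5 in the interior
c = np.arange(20, dtype=float)[np.newaxis, :] * 0.5
d = regression_delta(c)
print(d[0, 4:-4])  # every interior value is 0.5
```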

plt.figure(figsize=(10, 4))

delta2=librosa.feature.delta(mfcc,order=2)

librosa.display.specshow(delta2, x_axis='frames')

plt.colorbar()

plt.title(r'13dim-$\Delta^2$MFCC')

plt.tight_layout()

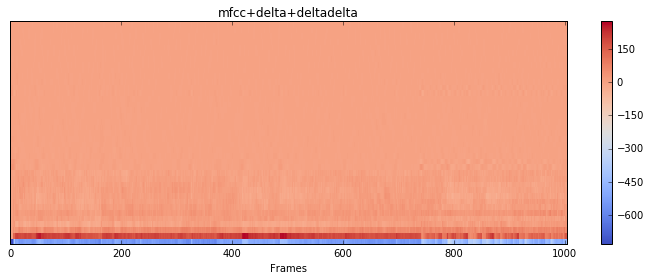

con_mfcc=np.concatenate((mfcc,delta,delta2),axis=0)

con_mfcc.shape

(39, 1003)
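Concatenating along `axis=0` stacks the coefficient rows: 13 MFCC + 13 Δ + 13 Δ² = 39 rows, while the frame count stays at 1003. Frame-level models typically take one feature vector per frame, with shape `(frames, 39)`, which is just the transpose. The sketch below uses random stand-ins, named differently from the real arrays so they are not overwritten.

```python
import numpy as np

n_frames = 1003
rng = np.random.default_rng(0)
# Random stand-ins with the same shapes as mfcc, delta and delta2 above
fake_mfcc, fake_delta, fake_delta2 = (rng.standard_normal((13, n_frames)) for _ in range(3))

stacked = np.concatenate((fake_mfcc, fake_delta, fake_delta2), axis=0)
per_frame = stacked.T  # one 39-dim vector per frame

print(stacked.shape, per_frame.shape)  # (39, 1003) (1003, 39)
# Columns 13..25 of each frame vector hold that frame's delta coefficients
print(np.array_equal(per_frame[5, 13:26], fake_delta[:, 5]))  # True
```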

plt.figure(figsize=(10, 4))

librosa.display.specshow(con_mfcc, x_axis='frames')

plt.colorbar()

plt.title('mfcc+delta+deltadelta')

plt.tight_layout()

MNIST Classification

from keras.datasets import mnist #MNIST dataset load

from keras.models import Sequential

from keras.layers import Dense

from keras.utils import np_utils

(x_train,y_train),(x_test,y_test) = mnist.load_data()

print(x_train.shape, x_test.shape, y_train.shape, y_test.shape)

(60000, 28, 28) (10000, 28, 28) (60000,) (10000,)

print(x_train[0].shape)

(28, 28)

np.set_printoptions(linewidth=4*29)

print(x_train[0])

[[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 3 18 18 18 126 136 175 26 166 255 247 127 0 0 0 0]

[ 0 0 0 0 0 0 0 0 30 36 94 154 170 253 253 253 253 253 225 172 253 242 195 64 0 0 0 0]

[ 0 0 0 0 0 0 0 49 238 253 253 253 253 253 253 253 253 251 93 82 82 56 39 0 0 0 0 0]

[ 0 0 0 0 0 0 0 18 219 253 253 253 253 253 198 182 247 241 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 80 156 107 253 253 205 11 0 43 154 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 14 1 154 253 90 0 0 0 0 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 139 253 190 2 0 0 0 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 11 190 253 70 0 0 0 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 35 241 225 160 108 1 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 81 240 253 253 119 25 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 45 186 253 253 150 27 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 16 93 252 253 187 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 249 253 249 64 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 46 130 183 253 253 207 2 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 39 148 229 253 253 253 250 182 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 24 114 221 253 253 253 253 201 78 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 23 66 213 253 253 253 253 198 81 2 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 18 171 219 253 253 253 253 195 80 9 0 0 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 55 172 226 253 253 253 253 244 133 11 0 0 0 0 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 136 253 253 253 212 135 132 16 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]]

plt.imshow(x_train[0]/255.0)

plt.gray()

plt.axis('off')

plt.show()

y_train[0]

5

# Flatten each 28x28 image into a 784-dim vector and scale pixel values to [0, 1]

x_train = x_train.reshape(60000,28*28).astype('float32') / 255.0

x_test = x_test.reshape(10000,28*28).astype('float32') / 255.0
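Reshaping turns each 28×28 image into one row of 784 pixels. The order is row-major, so pixel (r, c) lands at index 28·r + c. Dividing by 255 maps the uint8 range 0-255 onto [0, 1]. The sketch below applies the same operations to a small random stand-in batch.

```python
import numpy as np

rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(3, 28, 28), dtype=np.uint8)  # stand-in for x_train[:3]

flat = images.reshape(len(images), 28 * 28).astype("float32") / 255.0

r, c = 7, 12
print(flat.shape, flat.dtype)                                     # (3, 784) float32
print(np.isclose(flat[0, 28 * r + c], images[0, r, c] / 255.0))  # True: pixel (r, c) -> index 28*r + c
print(flat.min() >= 0.0, flat.max() <= 1.0)                       # True True
```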

x_train[0]

array([0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0.01176471,

0.07058824, 0.07058824, 0.07058824, 0.49411765, 0.53333336, 0.6862745 , 0.10196079, 0.6509804 , 1. ,

0.96862745, 0.49803922, 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0.11764706, 0.14117648, 0.36862746, 0.6039216 ,

0.6666667 , 0.99215686, 0.99215686, 0.99215686, 0.99215686, 0.99215686, 0.88235295, 0.6745098 , 0.99215686,

0.9490196 , 0.7647059 , 0.2509804 , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0.19215687, 0.93333334, 0.99215686, 0.99215686,

0.99215686, 0.99215686, 0.99215686, 0.99215686, 0.99215686, 0.99215686, 0.9843137 , 0.3647059 , 0.32156864,

0.32156864, 0.21960784, 0.15294118, 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0.07058824, 0.85882354, 0.99215686,

0.99215686, 0.99215686, 0.99215686, 0.99215686, 0.7764706 , 0.7137255 , 0.96862745, 0.94509804, 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0.3137255 ,

0.6117647 , 0.41960785, 0.99215686, 0.99215686, 0.8039216 , 0.04313726, 0. , 0.16862746, 0.6039216 ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0.05490196, 0.00392157, 0.6039216 , 0.99215686, 0.3529412 , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.54509807, 0.99215686, 0.74509805, 0.00784314, 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0.04313726, 0.74509805, 0.99215686, 0.27450982,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0.13725491, 0.94509804,

0.88235295, 0.627451 , 0.42352942, 0.00392157, 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0.31764707, 0.9411765 , 0.99215686, 0.99215686, 0.46666667, 0.09803922, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.1764706 , 0.7294118 , 0.99215686, 0.99215686, 0.5882353 , 0.10588235, 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.0627451 , 0.3647059 , 0.9882353 , 0.99215686, 0.73333335,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0.9764706 , 0.99215686,

0.9764706 , 0.2509804 , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0.18039216, 0.50980395, 0.7176471 , 0.99215686,

0.99215686, 0.8117647 , 0.00784314, 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.15294118, 0.5803922 , 0.8980392 , 0.99215686, 0.99215686,

0.99215686, 0.98039216, 0.7137255 , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.09411765, 0.44705883, 0.8666667 , 0.99215686, 0.99215686, 0.99215686,

0.99215686, 0.7882353 , 0.30588236, 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.09019608, 0.25882354, 0.8352941 , 0.99215686, 0.99215686, 0.99215686, 0.99215686,

0.7764706 , 0.31764707, 0.00784314, 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0.07058824, 0.67058825, 0.85882354, 0.99215686, 0.99215686, 0.99215686, 0.99215686, 0.7647059 ,

0.3137255 , 0.03529412, 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0.21568628, 0.6745098 , 0.8862745 , 0.99215686, 0.99215686, 0.99215686, 0.99215686, 0.95686275, 0.52156866,

0.04313726, 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0.53333336, 0.99215686, 0.99215686, 0.99215686, 0.83137256, 0.5294118 , 0.5176471 , 0.0627451 ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. , 0. ,

0. ], dtype=float32)

y_train

array([5, 0, 4, ..., 5, 6, 8], dtype=uint8)

One-hot encoding of the labels for training

y_train= np_utils.to_categorical(y_train)

## result

y_train

array([[0., 0., 0., ..., 0., 0., 0.],

[1., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

...,

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 1., 0.]], dtype=float32)

y_train[0]

array([0., 0., 0., 0., 0., 1., 0., 0., 0., 0.], dtype=float32)
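`to_categorical` turns each integer label into a vector with a 1 at the label's index. As `y_train[0]` shows above, label 5 becomes a 1 in position 5. The sketch below does the same thing in NumPy by indexing rows of an identity matrix. It assumes `num_classes=10`; when `num_classes` is not given, Keras infers it from the largest label. `one_hot` is a hypothetical name.

```python
import numpy as np


def one_hot(labels, num_classes=10):
    """Row i of the identity matrix is the one-hot vector for class i."""
    return np.eye(num_classes, dtype="float32")[np.asarray(labels)]


labels = np.array([5, 0, 4], dtype=np.uint8)  # the first three y_train labels above
encoded = one_hot(labels)
print(encoded[0])              # [0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]
print(encoded.argmax(axis=1))  # [5 0 4]: argmax undoes the encoding
```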

y_test = np_utils.to_categorical(y_test)

# Layer sizes of the MNIST classification model

n_input = 784  # 28*28 pixels

H1 = 512

H2 = 512

O = 10

[Source: https://github.com/wxs/keras-mnist-tutorial/raw/8824b7b56963a92ef879f09acd99cf3a210db2b8/figure.png ]
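The network is a multilayer perceptron with layers 784 → 512 → 512 → 10. Each `Dense` layer computes `activation(x @ W + b)`. The hidden layers use ReLU, and the output layer uses softmax so that the 10 scores form a probability distribution. The sketch below runs one forward pass in NumPy with untrained random weights. The Glorot-uniform initialization with zero biases mirrors Keras's default for `Dense`, but that detail is an assumption of the sketch.

```python
import numpy as np

rng = np.random.default_rng(0)
sizes = [784, 512, 512, 10]  # input, H1, H2, O

# Glorot-uniform weights and zero biases (untrained stand-ins)
params = []
for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    params.append((rng.uniform(-limit, limit, (fan_in, fan_out)), np.zeros(fan_out)))


def relu(z):
    return np.maximum(z, 0.0)


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def forward(x):
    h = x
    for i, (W, b) in enumerate(params):
        z = h @ W + b
        h = softmax(z) if i == len(params) - 1 else relu(z)
    return h


x_fake = rng.random((2, 784))  # two fake flattened images in [0, 1)
probs = forward(x_fake)
print(probs.shape)  # (2, 10); each row sums to 1
```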

# Only the first layer needs input_dim; each later Dense layer takes its input size from the previous layer's output.

model = Sequential()

model.add(Dense(units=512, input_dim=28*28, activation='relu'))

model.add(Dense(units=512, activation='relu'))

model.add(Dense(units=10,activation='softmax'))
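A `Dense` layer has `inputs × units` weights plus `units` biases. For this model that gives 401,920 + 262,656 + 5,130 = 669,706 trainable parameters, which should match the total reported by `model.summary()`. The arithmetic below uses a hypothetical helper, `dense_params`.

```python
def dense_params(n_in, n_out):
    """Weights (n_in x n_out) plus one bias per output unit."""
    return n_in * n_out + n_out


layer_shapes = [(784, 512), (512, 512), (512, 10)]
counts = [dense_params(i, o) for i, o in layer_shapes]
print(counts, sum(counts))  # [401920, 262656, 5130] 669706
```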

model.compile(loss='mean_squared_error',optimizer='sgd',metrics=['accuracy'])

hist = model.fit(x_train,y_train,epochs=10,batch_size=32)

Epoch 1/10

60000/60000 [==============================] - 10s 165us/step - loss: 0.0886 - acc: 0.2661

Epoch 2/10

60000/60000 [==============================] - 9s 156us/step - loss: 0.0828 - acc: 0.4897

Epoch 3/10

60000/60000 [==============================] - 10s 159us/step - loss: 0.0702 - acc: 0.5782

Epoch 4/10

60000/60000 [==============================] - 10s 160us/step - loss: 0.0516 - acc: 0.7253

Epoch 5/10

60000/60000 [==============================] - 10s 161us/step - loss: 0.0368 - acc: 0.8049

Epoch 6/10

60000/60000 [==============================] - 10s 160us/step - loss: 0.0280 - acc: 0.8525

Epoch 7/10

60000/60000 [==============================] - 10s 160us/step - loss: 0.0232 - acc: 0.8719

Epoch 8/10

60000/60000 [==============================] - 10s 159us/step - loss: 0.0204 - acc: 0.8830

Epoch 9/10

60000/60000 [==============================] - 10s 160us/step - loss: 0.0186 - acc: 0.8903

Epoch 10/10

60000/60000 [==============================] - 10s 160us/step - loss: 0.0173 - acc: 0.8967

model.evaluate(x_train,y_train)

60000/60000 [==============================] - 4s 61us/step

[0.016724505480378866, 0.8993333333333333]

model.evaluate(x_test,y_test)

10000/10000 [==============================] - 1s 61us/step

[0.015871783668361603, 0.9038]

from sklearn.metrics import confusion_matrix

model.predict(x_train)[0]

array([1.1833468e-02, 5.9616595e-04, 2.1906586e-02, 4.8277041e-01, 1.2570148e-04, 4.4862482e-01, 3.2751362e-03,

1.0664076e-02, 1.4754170e-02, 5.4494580e-03], dtype=float32)

model.predict_classes(x_train)

array([3, 0, 4, ..., 5, 6, 8])
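`predict_classes` returns the arg-max of the `predict` probabilities. For the first training image, the largest probability is 0.483 at index 3, while the true label is 5 (probability 0.449). The sample is therefore misclassified, which is why `predict_classes(x_train)` starts with 3. In newer TensorFlow/Keras releases `predict_classes` has been removed, and `np.argmax(model.predict(x), axis=1)` gives the same result.

The sketch below also shows what the `mean_squared_error` loss computes for this one sample: the mean, over the 10 outputs, of the squared difference from the one-hot target. For comparison it also computes categorical cross-entropy, the loss more commonly paired with a softmax output.

```python
import numpy as np

# Softmax output for x_train[0], copied from the model.predict output above
p = np.array([1.1833468e-02, 5.9616595e-04, 2.1906586e-02, 4.8277041e-01, 1.2570148e-04,
              4.4862482e-01, 3.2751362e-03, 1.0664076e-02, 1.4754170e-02, 5.4494580e-03])
target = np.eye(10)[5]  # y_train[0] is 5, one-hot encoded

predicted_class = int(p.argmax())            # what predict_classes returns for this sample
mse = np.mean((p - target) ** 2)             # per-sample 'mean_squared_error'
cross_entropy = -np.log(p[target.argmax()])  # per-sample 'categorical_crossentropy'

print(predicted_class)  # 3, while the true label is 5
print(round(mse, 4), round(cross_entropy, 4))
```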

model.predict_classes(x_test)

array([7, 2, 1, ..., 4, 8, 6])

y_test[0]

array([0., 0., 0., 0., 0., 0., 0., 1., 0., 0.], dtype=float32)

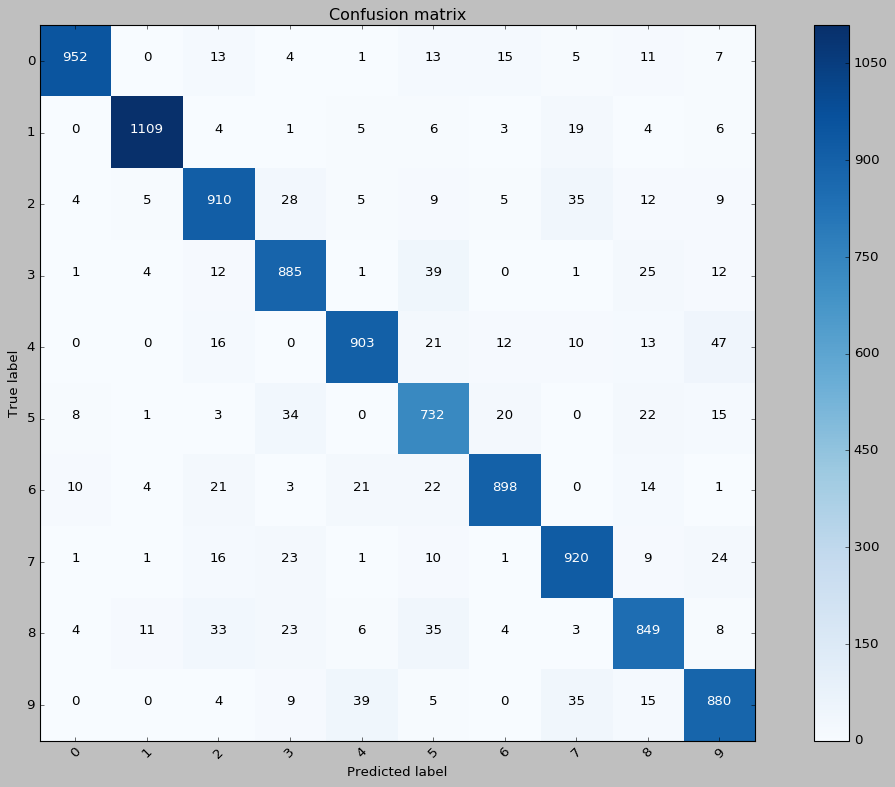

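# sklearn's signature is confusion_matrix(y_true, y_pred); with the predictions passed first, the rows below are predicted classes and the columns are true classes.

confusion_matrix(model.predict_classes(x_test),y_test.argmax(axis=1))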

array([[ 952, 0, 13, 4, 1, 13, 15, 5, 11, 7],

[ 0, 1109, 4, 1, 5, 6, 3, 19, 4, 6],

[ 4, 5, 910, 28, 5, 9, 5, 35, 12, 9],

[ 1, 4, 12, 885, 1, 39, 0, 1, 25, 12],

[ 0, 0, 16, 0, 903, 21, 12, 10, 13, 47],

[ 8, 1, 3, 34, 0, 732, 20, 0, 22, 15],

[ 10, 4, 21, 3, 21, 22, 898, 0, 14, 1],

[ 1, 1, 16, 23, 1, 10, 1, 920, 9, 24],

[ 4, 11, 33, 23, 6, 35, 4, 3, 849, 8],

[ 0, 0, 4, 9, 39, 5, 0, 35, 15, 880]])
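The matrix above supports two sanity checks. First, because the call passes the predictions first, its columns are the true classes. The column sums confirm this: they give 980 zeros, 1135 ones, and so on, which are the class counts of the MNIST test set. Second, the diagonal holds the correct predictions, so trace / total = 9038 / 10000 = 0.9038. This is the same test accuracy that `model.evaluate` reported. Transposing gives sklearn's usual orientation, with true classes on the rows, and per-class recall follows from that.

```python
import numpy as np

# Matrix printed above: rows = predicted class, columns = true class
cm_pred_true = np.array([
    [952, 0, 13, 4, 1, 13, 15, 5, 11, 7],
    [0, 1109, 4, 1, 5, 6, 3, 19, 4, 6],
    [4, 5, 910, 28, 5, 9, 5, 35, 12, 9],
    [1, 4, 12, 885, 1, 39, 0, 1, 25, 12],
    [0, 0, 16, 0, 903, 21, 12, 10, 13, 47],
    [8, 1, 3, 34, 0, 732, 20, 0, 22, 15],
    [10, 4, 21, 3, 21, 22, 898, 0, 14, 1],
    [1, 1, 16, 23, 1, 10, 1, 920, 9, 24],
    [4, 11, 33, 23, 6, 35, 4, 3, 849, 8],
    [0, 0, 4, 9, 39, 5, 0, 35, 15, 880],
])

cm = cm_pred_true.T  # rows = true class, as confusion_matrix(y_true, y_pred) would give

print(cm.sum())                 # 10000 test images
print(cm.sum(axis=1))           # images per true class
print(np.trace(cm) / cm.sum())  # 0.9038, the overall accuracy
recall = np.diag(cm) / cm.sum(axis=1)
print(np.round(recall, 3))      # per-class recall; digit 5 is the weakest
```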

print(plt.style.available)

[u'seaborn-darkgrid', u'Solarize_Light2', u'seaborn-notebook', u'classic', u'seaborn-ticks', u'grayscale', u'bmh', u'seaborn-talk', u'dark_background', u'ggplot', u'fivethirtyeight', u'_classic_test', u'seaborn-colorblind', u'seaborn-deep', u'seaborn-whitegrid', u'seaborn-bright', u'seaborn-poster', u'seaborn-muted', u'seaborn-paper', u'seaborn-white', u'fast', u'seaborn-pastel', u'seaborn-dark', u'seaborn', u'seaborn-dark-palette']

import itertools

plt.style.use('classic')

def plot_confusion_matrix(cm, classes,
                          normalize=False,
                          title='Confusion matrix',
                          cmap=plt.cm.Blues):
    """
    This function prints and plots the confusion matrix.
    Normalization can be applied by setting `normalize=True`.
    cm is expected in sklearn's orientation: rows = true labels, columns = predicted labels.
    """
    if normalize:
        # Scale each row to sum to 1 (how each true class is distributed over the predictions)
        cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]
        print("Normalized confusion matrix")
    else:
        print('Confusion matrix, without normalization')
    #print(cm)

    plt.figure(figsize=(15, 10))
    plt.imshow(cm, interpolation='nearest', cmap=cmap)
    plt.title(title)
    plt.colorbar()
    tick_marks = np.arange(len(classes))
    plt.xticks(tick_marks, classes, rotation=45)
    plt.yticks(tick_marks, classes)

    # Write each cell's value: white text on dark cells, black on light ones
    fmt = '.2f' if normalize else 'd'
    thresh = cm.max() / 2.
    for i, j in itertools.product(range(cm.shape[0]), range(cm.shape[1])):
        plt.text(j, i, format(cm[i, j], fmt),
                 horizontalalignment="center",
                 color="white" if cm[i, j] > thresh else "black")

    plt.ylabel('True label')
    plt.xlabel('Predicted label')
    plt.tight_layout()

# Pass the true labels first so that rows are true labels, matching the plot's axis labels
plot_confusion_matrix(confusion_matrix(y_test.argmax(axis=1), model.predict_classes(x_test)), range(0, 10), normalize=False)

Confusion matrix, without normalization